Diary / 5 entries up to 2020-7-23

2020-7-12

Measuring the room's CO2 concentration, part 3


This continues "Measuring the room's CO2 concentration" from two posts ago and "Measuring the room's CO2 concentration, continued" from the last post.

Last time I got the CO2 concentration showing on the LaMetric Time. Now I also wanted a graph of how the values change over time, not just the current numbers.

The script I posted last time (room.py) runs from cron every 5 minutes. As a by-product, it appends the current temperature, humidity, and CO2 concentration to room.log:

*/5 * * * * cd /home/pi/lametric/;./room.py >> /home/pi/lametric/room.log 2> /dev/null

room.log looks like this:

```
date,temperature,humidity,co2
2020-07-11 13:50,27,68,513
2020-07-11 13:55,27,68,519
2020-07-11 14:00,27,68,507
2020-07-11 14:05,27,68,508
2020-07-11 14:10,27,68,505
```
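The graph script below reads this file with pandas. If you just want to check the newest readings without pandas, a stdlib-only sketch like the following should also work. It assumes the header line shown above and treats an empty field (for example, a failed sensor read) as missing. The function name `last_rows` is only for illustration.

```python
import csv
import io
from collections import deque

def last_rows(lines, n):
    """Return the newest n records of a room.log-style CSV as dicts.

    Assumes the header `date,temperature,humidity,co2`; an empty or
    missing field becomes None instead of raising.
    """
    tail = deque(csv.DictReader(lines), maxlen=n)  # keep only the last n rows
    records = []
    for row in tail:
        rec = {"date": row["date"]}
        for key in ("temperature", "humidity", "co2"):
            value = (row.get(key) or "").strip()
            rec[key] = float(value) if value else None
        records.append(rec)
    return records

SAMPLE = """date,temperature,humidity,co2
2020-07-11 13:50,27,68,513
2020-07-11 13:55,27,68,519
2020-07-11 14:00,27,68,507
2020-07-11 14:05,27,68,508
2020-07-11 14:10,27,68,505
"""

for rec in last_rows(io.StringIO(SAMPLE), 2):
    print(rec["date"], rec["co2"])
```

With a real file, pass the open file object, e.g. `with open('room.log', newline='') as f: last_rows(f, 12)`.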

I tried plotting it.

```python
#!/usr/bin/env python3

import pandas as pd
import matplotlib.pyplot as plt
import re

df = pd.read_csv('room.log', index_col='date')
# Rows to plot, counted from the end: (60 / 5) rows per hour x 24 hours x 1 day = 288
period = int(-1 * (60 / 5) * 24 * 1)

# Take the last `period` rows and fill gaps (failed readings) by interpolation
ltst = df.iloc[period:].interpolate()

data1 = ltst.loc[:, 'temperature']
data2 = ltst.loc[:, 'humidity']
data3 = ltst.loc[:, 'co2']

# X-axis ticks: each midnight gets an MM-DD label, each noon an unlabeled tick.
# Only timestamps that are actually plotted are used as ticks.
xt = []
xl = []
for i in ltst.index:
    if i.endswith('00:00'):
        xt.append(i)
        xl.append(re.search(r'(\d\d-\d\d) ', i).group(1))
    elif i.endswith('12:00'):
        xt.append(i)
        xl.append('')

# Newer matplotlib renamed this style to 'seaborn-v0_8-darkgrid'
style = 'seaborn-v0_8-darkgrid'
plt.style.use(style if style in plt.style.available else 'seaborn-darkgrid')

fig, [ax1, ax2, ax3] = plt.subplots(3, 1, sharex='col')
fig.set_figwidth(12.8)
fig.set_figheight(9.6)

ax1.plot(data1, color='indianred')
ax1.set_ylabel('temperature')
ax2.plot(data2, color='royalblue')
ax2.set_ylabel('humidity')
ax3.plot(data3, color='seagreen')
ax3.set_ylabel('co2')
ax3.set(xticks=xt, xticklabels=xl)

plt.tight_layout()
plt.savefig('graph.png')
```

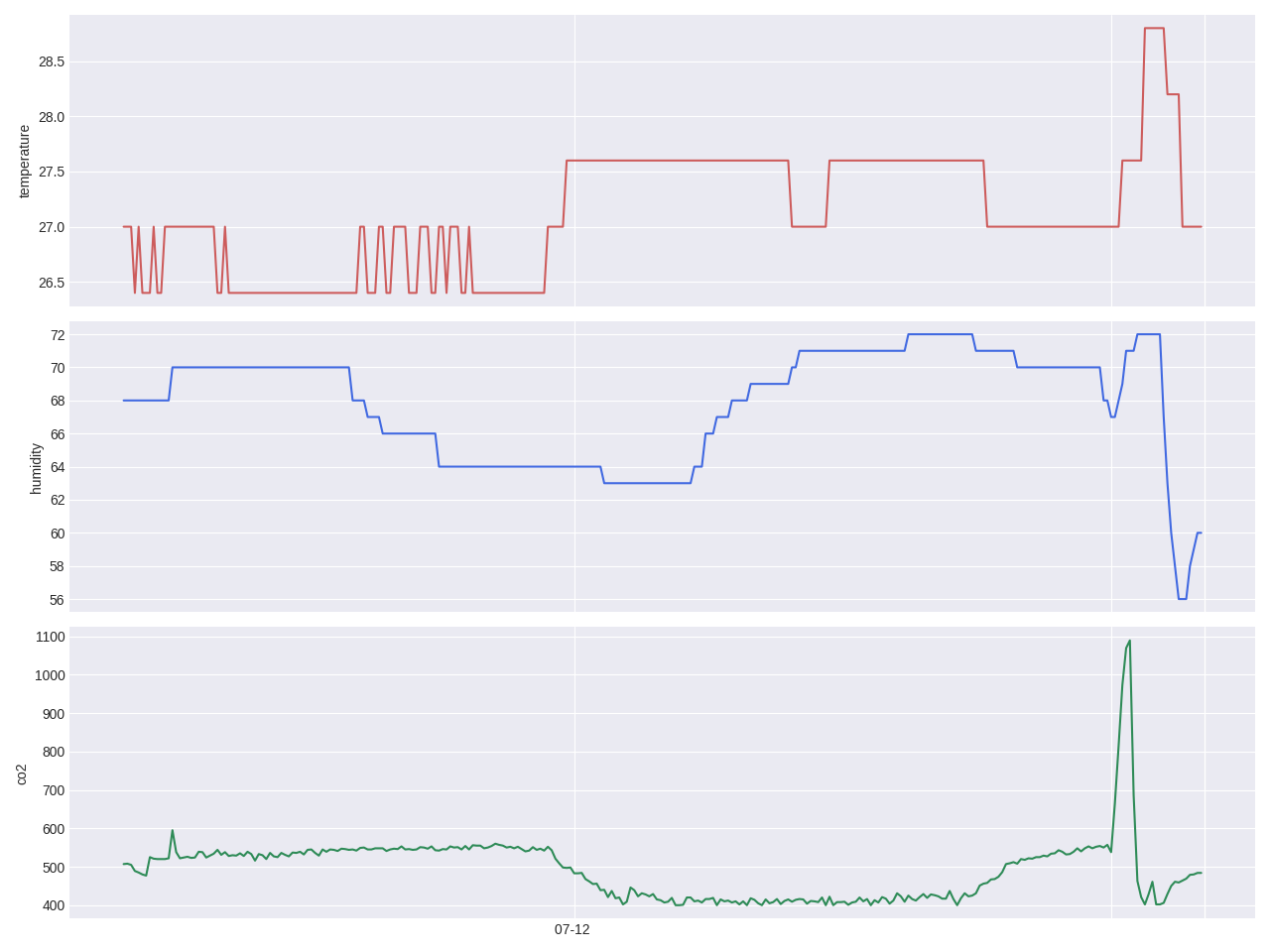

This is the resulting graph (graph.png). It covers one day: it shows the last rows of the log, and the number of rows is set by the variable `period` on line 9.
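Here is the arithmetic behind `period`. One row every 5 minutes gives 60 / 5 = 12 rows per hour, so one day is 12 × 24 = 288 rows, and the script takes the last 288. The sketch below uses hypothetical helper names. It computes that count for any number of days and reproduces the midnight/noon tick selection as a pure function, so both can be checked without drawing anything.

```python
import re

def rows_per_days(days, interval_min=5):
    """Rows covering `days` days when cron logs one row every `interval_min` minutes."""
    return int(60 / interval_min * 24 * days)

def day_ticks(timestamps):
    """Ticks at midnight (labelled MM-DD) and noon (unlabelled), as in the graph script."""
    ticks, labels = [], []
    for ts in timestamps:
        if ts.endswith("00:00"):
            ticks.append(ts)
            labels.append(re.search(r"(\d\d-\d\d) ", ts).group(1))
        elif ts.endswith("12:00"):
            ticks.append(ts)
            labels.append("")
    return ticks, labels

period = -rows_per_days(1)   # same value the script computes for one day
print(period, rows_per_days(3))
```

To plot three days instead of one, `period = -rows_per_days(3)` would replace the hard-coded expression.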

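The jump in CO2 around 12:00 is probably from lighting the gas stove to cook lunch. You can also see that when I ventilated the room, the CO2 level dropped sharply, and the temperature and humidity dropped at the same time.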

So what? you might ask. Good question.


2020-7-11

Measuring the room's CO2 concentration, continued


This continues the previous post, "Measuring the room's CO2 concentration".

The MH-Z19 CO2 sensor I ordered from Banggood.com on 2020/06/20 has arrived.

It arrived on 2020/07/10, so delivery took 20 days from the order (the quoted delivery time was 10 to 30 days).

Incidentally, what actually arrived was an MH-Z19B.

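Right away, using this page and others as a reference, I connected it to the Raspberry Pi 3B. I already use that Pi to read temperature and humidity from the Nature Remo's built-in sensor and show them on the LaMetric Time. Because of where it sits, I have taped a piece of cardboard underneath it.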

The values seemed to come through fine, so I displayed them on the LaMetric Time.

```python
#!/usr/local/bin/python3

import requests
import json
import datetime
import subprocess

# Nature Remo cloud API: the device list includes the latest sensor events
url = 'https://api.nature.global/1/devices'
headers = {
    'Accept': 'application/json',
    'Authorization': 'Bearer xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx'
}
res = requests.get(url, headers=headers)
res.raise_for_status()
data = res.json()

hum = str(data[0]['newest_events']['hu']['val'])
temp = str(round(data[0]['newest_events']['te']['val'], 1))

# The mh_z19 module prints JSON such as {"co2": 513}; run as root, as before
mh = subprocess.check_output(['sudo', 'python3', '-m', 'mh_z19']).decode('utf-8')
mh = json.loads(mh)
co2 = str(mh['co2'])

# One CSV line per run; cron appends stdout to room.log
print(f"{datetime.datetime.now().strftime('%Y-%m-%d %H:%M')},{temp},{hum},{co2}")

hum = hum + '%'
temp = temp + '°C'
co2 = co2 + 'ppm'

# One LaMetric frame per value; the icon values are LaMetric icon IDs
disp = {
    'frames': [
        {'index': 0, 'text': temp, 'icon': '12464'},
        {'index': 1, 'text': hum, 'icon': '12184'},
        {'index': 2, 'text': co2, 'icon': '32936'},
    ]
}

headers = {
    'X-Access-Token': 'yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy',
    'Cache-Control': 'no-cache',
    'Accept': 'application/json'
}
url = "https://developer.lametric.com/api/V1/dev/widget/update/com.lametric.zzzzzzzzzzzzzzzzzzzzzzzzzzzzzzzz/1"
# json= serializes the payload and sets Content-Type: application/json
res = requests.post(url, json=disp, headers=headers)
```

I run the script above from cron every 5 minutes to keep the display up to date.
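Because cron runs this unattended, it can help to separate the parts that only format data from the parts that talk to Nature Remo, the sensor, and LaMetric. The sketch below is one way to do that. It reuses the frame order and icon IDs from the script above. `build_frames` and `log_line` are hypothetical names, and the network and sensor calls are deliberately left out so the formatting can be tested on its own.

```python
import datetime
import json

def build_frames(temp, hum, co2):
    """LaMetric payload with the same frame order and icons as the script above."""
    return {
        "frames": [
            {"index": 0, "text": f"{round(temp, 1)}°C", "icon": "12464"},
            {"index": 1, "text": f"{hum}%", "icon": "12184"},
            {"index": 2, "text": f"{co2}ppm", "icon": "32936"},
        ]
    }

def log_line(now, temp, hum, co2):
    """One room.log record in the date,temperature,humidity,co2 format."""
    return f"{now:%Y-%m-%d %H:%M},{round(temp, 1)},{hum},{co2}"

print(log_line(datetime.datetime(2020, 7, 11, 13, 50), 27, 68, 513))
print(json.dumps(build_frames(27, 68, 513), ensure_ascii=False))
```

The main script would then only fetch the three readings, print `log_line(...)`, and post `build_frames(...)`.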

The display cycles through, in order: time -> date -> AMeDAS temperature -> weather and chance of precipitation -> wind speed -> temperature -> humidity -> CO2 concentration. (The AMeDAS data is updated by a separate script.)

Well, that's all there is to it.


2020-7-5

Scraping to import Hanshin and pro baseball schedules into Google Calendar, 2020 edition


This year, pro baseball finally got under way (on 2020/06/19). As a Hanshin fan, as of 2020/07/05 I suspect the season is already all but over for us. That aside, last year I introduced the following scripts:

- Scraping to import the Hanshin Tigers game schedule into Google Calendar
- Scraping to import the pro baseball schedule into Google Calendar
- Scraping to import the pro baseball schedule into Google Calendar (continued)

I promptly tried using them again this year to import the schedules into Google Calendar, but they wouldn't work as is.

Here is what I had to do to get them running.

Hanshin Tigers game schedule

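First, the script fixes:

- Orix's team code changed from "bs" to "b", so I updated the mapping.
- Changed the year to "2020" and the months to match this year's irregular schedule.
- Removed the "JERA ..." sponsor label from the game info, since it cluttered the entries.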
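The two fixes above could also be made less year-specific. This is a sketch, not what the script does. Month lengths come from the standard `calendar` module instead of a hand-written dict. A small alias table maps old team codes to new ones; it is hypothetical and contains only the "bs" -> "b" change mentioned above. The month lengths come back as ints; the script's `int(month_days[month])` accepts those as well.

```python
import calendar

def month_days(year, first_month, last_month):
    """{'MM': number_of_days} for the given months of `year`."""
    return {f"{m:02d}": calendar.monthrange(year, m)[1]
            for m in range(first_month, last_month + 1)}

# Hypothetical alias table: team codes from older page versions -> current codes
TEAM_CODE_ALIASES = {"bs": "b"}

def normalize_team_code(code):
    """Map an outdated team code to its current form; unknown codes pass through."""
    return TEAM_CODE_ALIASES.get(code, code)

print(month_days(2020, 6, 11))
```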

```python
#!/usr/bin/python3
#coding: utf-8
#scrapingtigers.py

import re
import datetime

import requests
from bs4 import BeautifulSoup

data = {}
year = '2020'

# Team codes used in the CSS classes of the schedule page
team = {
    't': '阪神',
    's': 'ヤクルト',
    'd': '中日',
    'h': 'ソフトバンク',
    'e': '楽天',
    'f': '日本ハム',
    'l': '西武',
    'db': 'DeNA',
    'm': 'ロッテ',
    'b': 'オリックス',
    'g': '巨人',
    'c': '広島',
}

head = "Subject, Start Date, Start Time, End Date, End Time, Description, Location"
print(head)

#month_days = {'03':'31', '04':'30', '05':'31', '06':'30', '07':'31', '08':'31', '09':'30'}
month_days = {'06':'30', '07':'31', '08':'31', '09':'30', '10':'31', '11':'30'}

# One entry per calendar day, pre-filled with its date string (YYYY/MM/DD)
for month in month_days.keys():
    data.setdefault(month, {})
    for day in range(int(month_days[month])):
        data[month].setdefault(day + 1, {})
        data[month][day + 1].setdefault('date', year + '/' + month + '/' + ('0' + str(day + 1))[-2:])

for month in month_days.keys():
    html = requests.get("https://m.hanshintigers.jp/game/schedule/" + year + "/" + month + ".html")
    soup = BeautifulSoup(html.text, features="lxml")
    day = 1
    # The page has one li.box_right.gameinfo per day of the month, in order
    for tag in soup.select('li.box_right.gameinfo'):
        text = re.sub(' +', '', tag.text)
        info = text.split("\n")
        if len(info) > 3:
            # Blank out empty (&nbsp;) game info and the sponsor label starting with "JERA"
            if info[1] == '\xa0' or re.match('JERA', info[1]):
                info[1] = ''
            data[month][day].setdefault('gameinfo', info[1])
            data[month][day].setdefault('start', info[2])
            data[month][day].setdefault('stadium', info[3])
            if re.match('オールスターゲーム', info[2]):
                data[month][day]['gameinfo'] = info[2]
                data[month][day]['start'] = '18:00'
        # Opponents come from the CSS classes of the logo elements
        text = str(tag.div)
        m = re.match(r'^.*"nologo">(\w+)<.*$', text, flags=(re.MULTILINE|re.DOTALL))
        if m:
            data[month][day].setdefault('gameinfo', m.group(1))
        m = re.match(r'^.*"logo_left (\w+)">.*$', text, flags=(re.MULTILINE|re.DOTALL))
        if m:
            data[month][day].setdefault('team1', team[m.group(1)])
        m = re.match(r'^.*"logo_right (\w+)">.*$', text, flags=(re.MULTILINE|re.DOTALL))
        if m:
            data[month][day].setdefault('team2', team[m.group(1)])
        day += 1

for month in month_days.keys():
    for day in data[month].keys():
        entry = data[month][day]
        if not entry.get('start'):
            continue
        m = re.match(r'(\d+):(\d+)', entry['start'])
        if not m:
            continue
        start = datetime.datetime(int(year), int(month), int(day), int(m.group(1)), int(m.group(2)), 0)
        end = start + datetime.timedelta(hours=4)   # calendar entry length: 4 hours
        sttm = start.strftime("%H:%M:%S")
        entm = end.strftime("%H:%M:%S")
        summary = ''
        if entry['gameinfo']:
            summary = entry['gameinfo'] + " "
        if not re.match('オールスターゲーム', entry['gameinfo']):
            summary += entry.get('team1', '') + "対" + entry.get('team2', '')
        #head = "Subject, Start Date, Start Time, End Date, End Time, Description, Location"
        print(f"{summary}, {entry['date']}, {sttm}, {entry['date']}, {entm}, {summary}, {entry['stadium']}")
```

I expected the script to run with just these fixes (environment: Ubuntu 20.04 on WSL, Windows 10), but it stopped with an error like this:

SSL routines:tls12_check_peer_sigalg:wrong signature type

The cause is the old OpenSSL version that Ubuntu 20.04 ships by default. It had 1.1.1f; after upgrading to 1.1.1g the script runs without problems. apt can't do this upgrade, and it seems you have to compile from source (at least, that's what I did). Please look up the instructions available online.
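Before rebuilding OpenSSL, it may be worth checking which version Python's `ssl` module is actually linked against. That is the one `requests` uses, and it can differ from what the `openssl` command-line tool reports. Below is a minimal check, assuming 1.1.1g as the target. In `ssl.OPENSSL_VERSION_INFO` the patch letter is stored as a number ('f' is 6, 'g' is 7).

```python
import ssl

def openssl_at_least(minimum, info=ssl.OPENSSL_VERSION_INFO):
    """True if the (major, minor, fix, patch) part of `info` is >= `minimum`.

    `info` defaults to the OpenSSL that this Python's ssl module uses.
    """
    return tuple(info[:4]) >= tuple(minimum[:4])

print(ssl.OPENSSL_VERSION)               # human-readable version string
print(openssl_at_least((1, 1, 1, 7)))    # at least 1.1.1g?
```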

After that, write the output to a CSV file and import it into Google Calendar, and you're done.

Pro baseball schedule

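This one was plagued by a different, strange error. This time no environment update was needed; fixing the script was enough.

- Changed the year to "2020" and the months to match this year's irregular schedule.
- Added a workaround so that SSL certificate verification doesn't cause an error.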

```python
#!/usr/bin/python3
#coding: utf-8
#scrapingnpb2.py

import sys
import re
import datetime
import ssl

import pandas as pd

# Workaround for the SSL error described below: make urllib's default HTTPS
# context skip certificate verification for the whole process (insecure).
ssl._create_default_https_context = ssl._create_unverified_context

print("Subject, Start Date, Start Time, End Date, End Time, Description, Location")

year = '2020'
#months = ['03', '04', '05', '06', '07', '08', '09']
months = ['06', '07', '08', '09', '10', '11']

# Columns of each row: 0, 1, 2, 3, 4, 5
#(0, '3/29（金）', 'DeNA - 阪神', '横\u3000浜 18:30', nan, nan)
for month in months:
    url = "http://npb.jp/games/" + year + "/schedule_" + month + "_detail.html"
    tb = pd.read_html(url)
    for row in tb[0].itertuples(name=None):
        card = ''
        md = re.sub(r'（.*）', '', row[1])   # '3/29（金）' -> '3/29'
        ymd = year + '/' + md
        sttm = ''
        entm = ''
        place = ''
        if isinstance(row[2], str):        # NaN (a float) when there is no game
            card = re.sub(' - ', '対', row[2])
        if isinstance(row[3], str):
            place_time = row[3].split(' ')
            if len(place_time) > 1:
                # Start time given: the calendar entry lasts 200 minutes
                (sthr, stmn) = place_time[1].split(':')
                (mon, day) = md.split('/')
                start = datetime.datetime(int(year), int(mon), int(day), int(sthr), int(stmn), 0)
                end = start + datetime.timedelta(minutes=200)
                sttm = start.strftime("%H:%M:%S")
                entm = end.strftime("%H:%M:%S")
                place = re.sub(r'\s+', '', place_time[0])
            else:
                # No start time yet: default to 18:00-21:20
                sttm = '18:00:00'
                entm = '21:20:00'
                place = place_time[0]
        # Optional argument: a regex selecting cards (e.g. a team name)
        if len(sys.argv) > 1:
            if re.search(sys.argv[1], card):
                print(f"{card}, {ymd}, {sttm}, {ymd}, {entm}, {card}, {place}")
        elif card != '':
            print(f"{card}, {ymd}, {sttm}, {ymd}, {entm}, {card}, {place}")
```

This one failed with the following error:

urllib.error.URLError: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1108)>

The difference from last year is that the NPB site has moved to "https".

According to the pandas documentation, pandas.read_html (or rather lxml) does not support https:

pandas.read_html(io, match='.+', flavor=None, header=None, index_col=None, skiprows=None, attrs=None, parse_dates=False, thousands=',', encoding=None, decimal='.', converters=None, na_values=None, keep_default_na=True, displayed_only=True)

Read HTML tables into a list of DataFrame objects.

Parameters: io : str, path object or file-like object

A URL, a file-like object, or a raw string containing HTML. Note that lxml only accepts the http, ftp and file url protocols. If you have a URL that starts with 'https' you might try removing the 's'.

That is why the script accesses the "http" URL. Even so, it still hits the SSL verification error (probably because the site redirects http requests to https).

With no other option, I made the script ignore SSL verification errors.
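Disabling verification works, but it also removes protection against tampered responses. An alternative worth trying is sketched below. It assumes the machine's CA store can validate npb.jp's certificate: download the page yourself over https with verification on, then hand the HTML to `pandas.read_html`. If the error comes from the certificate chain itself, this will still fail. In that case, the usual next step is to pass a CA bundle via `ssl.create_default_context(cafile=...)`.

```python
import ssl
import urllib.parse
import urllib.request

def to_https(url):
    """Rewrite an http:// URL to https://; other schemes are left untouched."""
    parts = urllib.parse.urlsplit(url)
    if parts.scheme == "http":
        parts = parts._replace(scheme="https")
    return urllib.parse.urlunsplit(parts)

def fetch_html(url, timeout=10.0):
    """Download a page over HTTPS with certificate and hostname checks left on."""
    context = ssl.create_default_context()  # system CA store
    with urllib.request.urlopen(to_https(url), timeout=timeout, context=context) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")

# Usage (needs network access and pandas):
#   import io
#   import pandas as pd
#   html = fetch_html("http://npb.jp/games/2020/schedule_06_detail.html")
#   tables = pd.read_html(io.StringIO(html))
```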

Here too, write the output to a CSV file and import it into Google Calendar, and you're done.
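The per-row handling in the NPB script can also be pulled out into a function and tested against the sample row in its comment. This sketch roughly mirrors the script: games last 200 minutes, and 18:00 is used when no start time is listed. The `SEPARATOR` placed between the two team names is an assumption.

```python
import datetime
import math
import re

SEPARATOR = "対"  # assumption: character placed between the two team names

def row_to_csv(year, row, minutes=200):
    """Convert one read_html row into a Google Calendar CSV line (None if no game)."""
    _, day_cell, card_cell, place_cell = row[:4]
    if not isinstance(card_cell, str):
        return None                                  # NaN cell: no game that day
    md = re.sub(r"（.*）", "", day_cell)              # '3/29（金）' -> '3/29'
    mon, day = (int(x) for x in md.split("/"))
    card = card_cell.replace(" - ", SEPARATOR)
    place, hhmm = "", "18:00"                         # fallback start time
    if isinstance(place_cell, str):
        place, _, time_part = place_cell.partition(" ")
        place = re.sub(r"\s+", "", place)             # also removes the ideographic space
        hhmm = time_part or hhmm
    hour, minute = (int(x) for x in hhmm.split(":"))
    start = datetime.datetime(year, mon, day, hour, minute)
    end = start + datetime.timedelta(minutes=minutes)
    ymd = f"{year}/{md}"
    return f"{card}, {ymd}, {start:%H:%M:%S}, {ymd}, {end:%H:%M:%S}, {card}, {place}"

example = (0, "3/29（金）", "DeNA - 阪神", "横\u3000浜 18:30", math.nan, math.nan)
print(row_to_csv(2019, example))
```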

2020-6-29

Changing how I get train delay information


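Every morning I have Google Home read out the day's schedule, the weather forecast, and so on.

It also reads out delay information for the railway lines I commute on. Recently (since 2020/06/25), however, it has stopped doing so for some reason.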

The cause: the "train delay information JSON" API below has stopped.

The site does say that it is run as a personal service and "may be discontinued without notice", so it can't be helped.

Thank you for everything so far.

It may come back if I wait a while, but there is no telling. So I decided to switch to the Atom 1.0 feed of the service-status page on Tetsudo.com (鉄道コム), which was the source of the API that stopped, and get the delay information from there.

Below is the relevant function from my GAS (Google Apps Script).

Before:

```javascript
function trainDelay() {
  var url = "https://tetsudo.rti-giken.jp/free/delay.json";
  var response = UrlFetchApp.fetch(url);
  var json = JSON.parse(response.getContentText());
  var text = "";
  // LINE_A / LINE_B / LINE_C: placeholder names for the three lines being watched
  for (var i in json) {
    if (json[i].name === "LINE_A" || json[i].name === "LINE_B" || json[i].name === "LINE_C") {
      text += json[i].name + "、";
    }
  }
  if (!text) {
    text = "鉄道の運行遅延情報はありません。";
  } else {
    text += "が遅延しています。";
  }
  return text;
}
```

After:

```javascript
function trainDelay() {
  // Atom 1.0 feed of Tetsudo.com's service information
  var url = 'http://api.tetsudo.com/traffic/atom.xml';
  var response = UrlFetchApp.fetch(url);
  var xml = XmlService.parse(response.getContentText());
  // Atom elements live in this namespace; it must be passed to getChildren/getChild
  var ns = XmlService.getNamespace('', 'http://www.w3.org/2005/Atom');
  var items = xml.getRootElement().getChildren('entry', ns);
  // Placeholder names for the three lines being watched
  var check = ['LINE_A', 'LINE_B', 'LINE_C'];
  var text = "";
  for (var i in items) {
    var title = items[i].getChild('title', ns).getText();
    for (var j in check) {
      var reg = new RegExp(check[j]);
      if (title.match(reg)) {
        text += check[j] + "、";
      }
    }
  }
  if (!text) {
    text = "鉄道の運行遅延情報はありません。";
  } else {
    text += "が遅延しています。";
  }
  return text;
}
```

What puzzled me most was that, for some reason, the XML would not parse properly. From a page I eventually found after a lot of searching, I learned that the namespace has to be specified. Lesson learned.
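The namespace issue is not specific to GAS. Python's `xml.etree.ElementTree` behaves the same way. In an Atom feed every element lives in the `http://www.w3.org/2005/Atom` namespace, so a lookup for a plain `entry` finds nothing. Here is a small sketch with a made-up feed and placeholder line names. Unlike the GAS version, it lists each matching line only once.

```python
import xml.etree.ElementTree as ET

ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Made-up feed for illustration; real entries come from the service-status feed
FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>sample</title>
  <entry><title>LINE_A: delays</title></entry>
  <entry><title>LINE_C: service suspended</title></entry>
</feed>"""

def delayed_lines(atom_xml, watch):
    """Return the watched line names that appear in any entry title."""
    root = ET.fromstring(atom_xml)
    titles = [
        entry.findtext("atom:title", default="", namespaces=ATOM_NS)
        for entry in root.findall("atom:entry", ATOM_NS)
    ]
    return [name for name in watch if any(name in title for title in titles)]

def announcement(hits):
    """Text to read out; each line appears once even if several entries mention it."""
    if not hits:
        return "No train delays reported."
    return "Delayed: " + ", ".join(hits)

root = ET.fromstring(FEED)
print(len(root.findall("entry")))   # 0: no namespace given, so nothing matches
print(announcement(delayed_lines(FEED, ["LINE_A", "LINE_B", "LINE_C"])))
```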

2020-6-20

Measuring the room's CO2 concentration


Technically, the following is of almost no use. It is the story of how I spent a lot of time and effort for nothing.


LaMetric Time

I have a LaMetric Time that I bought a few years ago, and I enjoy having it display all sorts of information.

Honestly, its practical value is questionable, but it livens the place up.

What it shows, in order:

- Time
- Date and day of the week
- AMeDAS observation
  - Temperature
- Weather (the icon switches between sunny, cloudy, and rain) and chance of precipitation
- Wind speed
- Nature Remo built-in sensor
  - Temperature
  - Humidity

Lately I've been putting the gadgets I own to more use around the house. One of them is the Withings WS-50 body scale I bought several years ago.

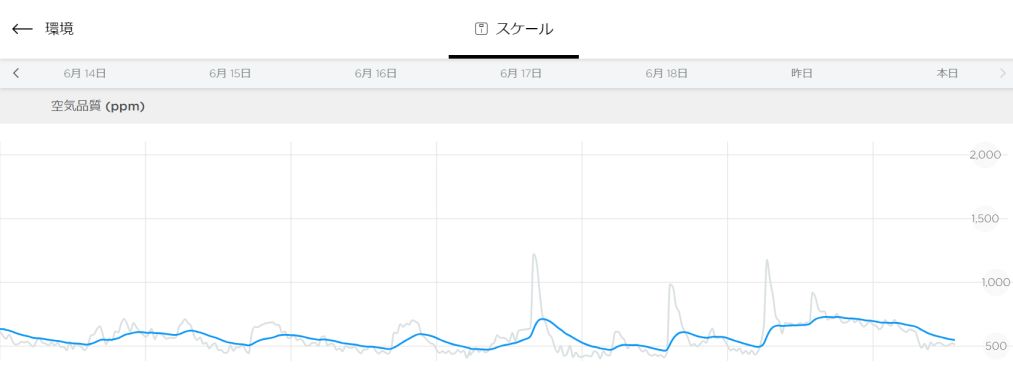

I remembered that it has a built-in CO2 sensor. Indeed, the Withings site for viewing device data also shows a graph of the CO2 concentration.


So I wanted to show this CO2 concentration on the LaMetric Time.


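It is said that once the indoor CO2 concentration exceeds 1000 ppm, people get drowsy and their efficiency drops considerably.

Judging from the graph, the CO2 concentration seems to be measured once every 30 minutes. So I wanted to show it on the LaMetric Time at all times and build something that prompts me to ventilate, for example a warning when it goes above 1000 ppm.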
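A warning that turns on and off every time the reading crosses 1000 ppm would flicker around the threshold. A common remedy is hysteresis: turn the warning on above 1000 ppm, and turn it off only after the value falls below a lower level. The 900 ppm off-threshold and the " !" marker below are assumptions for illustration.

```python
ON_PPM = 1000   # "above 1000 ppm" threshold from the text
OFF_PPM = 900   # assumption: clear the warning only once the level is clearly lower

def update_alert(ppm, alerting, on=ON_PPM, off=OFF_PPM):
    """Return the new warning state after one reading."""
    if ppm > on:
        return True
    if ppm < off:
        return False
    return alerting  # between the two thresholds: keep the previous state

def co2_text(ppm, alerting):
    """Frame text for the display; the ' !' marker is an illustrative choice."""
    return f"{ppm}ppm" + (" !" if alerting else "")

state = False
for reading in [950, 1010, 980, 920, 890]:
    state = update_alert(reading, state)
    print(co2_text(reading, state))
```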


Withings API (OAuth 2.0)

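Looking into it, I found that Withings publishes an API for retrieving data from its devices.

How to use it is explained in detail on the following site.

Following the steps on that page, I managed to get data from the WS-50.

However, although I could get data such as body weight, I couldn't figure out how to get the CO2 concentration.

And then it finally dawned on me: the API has no CO2 concentration at all! Look closely at the table of retrievable measurement types on the Withings API guide sites and the official page, and there is no CO2 entry.

Since there is no CO2 data either way, it no longer matters to me. Still, this API also needs an access token, which expires after 30 minutes and has to be reissued every time. That alone was quite a hassle.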
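The usual way to live with short-lived tokens is to cache the token and renew it shortly before it expires. The sketch below shows only that caching logic. `fetch` is a hypothetical callable that would perform the actual OAuth 2.0 token request and return the new token and its lifetime in seconds.

```python
import time

class TokenCache:
    """Reuse an access token until `margin` seconds before it expires, then renew it."""

    def __init__(self, fetch, margin=60, clock=time.monotonic):
        self._fetch = fetch      # hypothetical: returns (token, lifetime_seconds)
        self._margin = margin
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            token, lifetime = self._fetch()
            self._token = token
            self._expires_at = now + lifetime
        return self._token

# Usage with a dummy fetch function (a real one would call the token endpoint)
cache = TokenCache(lambda: ("dummy-token", 1800))
print(cache.get())
```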


Withings WS-50 Scale Syncer - Temperature & CO2

Still not giving up, I kept searching the web and found a page that looked perfect for exactly my use case.

That page presents Withings WS-50 Scale Syncer - Temperature & CO2. The tool uses the endpoints of the Withings smartphone app to fetch the WS-50's temperature and CO2 sensor values and pass them to Domoticz, an open-source home automation system. All I needed was to pull the WS-50 data out, so I decided to modify that tool.

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-

from datetime import datetime
import sys
import time
import hashlib

import requests

TMPID = 12   # measurement type: temperature
CO2ID = 35   # measurement type: CO2 concentration
NOW = int(time.time())
PDAY = NOW - (60 * 60 * 24)   # 24 hours ago

HEADER = {'user-agent': 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36'}
URL_BASE = "https://scalews.withings.net/cgi-bin"
URL_AUTH = URL_BASE + "/auth?action=login&appliver=3000201&apppfm=android&appname=wiscaleNG&callctx=foreground"
URL_ASSO = URL_BASE + "/association?action=getbyaccountid&enrich=t&appliver=3000201&apppfm=android&appname=wiscaleNG&callctx=foreground&sessionid="
URL_USAGE = "https://goo.gl/z6NNlH"


def authenticate_withings(username, password):
    """Log in the way the Withings app does and return (deviceid, sessionid)."""
    try:
        import certifi
        pem = certifi.old_where()
    except Exception:
        pem = True
    # Usage ping inherited from the original tool; a failure here should not stop the script
    try:
        requests.head(URL_USAGE, timeout=3, headers=HEADER, allow_redirects=True, verify=pem)
    except requests.RequestException:
        pass
    payload = {'email': username, 'hash': hashlib.md5(password.encode('utf-8')).hexdigest(), 'duration': '900'}
    response = requests.post(URL_AUTH, data=payload)
    sessionkey = response.json()['body']['sessionid']
    response = requests.get(URL_ASSO + sessionkey)
    deviceid = response.json()['body']['associations'][0]['deviceid']
    return deviceid, sessionkey


def download_data(deviceid, sessionkey, mtype, lastdate):
    """Fetch measurements of type `mtype` from `lastdate` up to NOW (unix time)."""
    payload = '/v2/measure?action=getmeashf&deviceid=' + str(deviceid) + '&meastype=' + str(mtype) + \
              '&startdate=' + str(lastdate) + '&enddate=' + str(NOW) + \
              '&appliver=3000201&apppfm=android&appname=wiscaleNG&callctx=foreground&sessionid=' + str(sessionkey)
    try:
        response = requests.get(URL_BASE + payload)
    except Exception:
        sys.exit("[-] Data download failed, exiting" + "\n")
    return response.json()


def main():
    username = 'mail@address'
    password = 'password'
    deviceid, sessionkey = authenticate_withings(username, password)
    co2data = download_data(deviceid, sessionkey, CO2ID, PDAY)
    # Newest first, with the unix timestamp converted to local time
    for item in sorted(co2data['body']['series'][0]['data'], key=lambda x: x['date'], reverse=True):
        dt = datetime.fromtimestamp(item['date'])
        print(f"date:{dt}, co2:{item['value']}")


if __name__ == "__main__":
    main()
```

For debugging, the script above prints one line per measurement for the past day (time and CO2 concentration), newest first:

```
date:2020-06-20 13:30:13, co2:509
date:2020-06-20 13:00:12, co2:519
date:2020-06-20 12:30:13, co2:506
date:2020-06-20 12:00:12, co2:497
date:2020-06-20 11:30:13, co2:509
…
```

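At first I printed the measurement times as raw unixtime, exactly as they came in the data, so it took me a while to notice something. No matter when I ran the script, the newest data point it returned was several hours older than the time of the run.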

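What on earth was going on?

Then it finally dawned on me. The page "Saving CO2 concentration to CloudWatch metrics using a Withings WS-50" states it clearly, right at the top:

However, the WS-50 sends its sensor data only in the following two cases, so the CO2 concentration cannot be obtained in real time:

- once a day, at a fixed time, it sends the environment data (CO2 concentration and temperature)
- it sends the environment data when you weigh yourself

Note that the CO2 concentration is recorded every 30 minutes.

In other words, the CO2 concentration is measured every 30 minutes, but the readings are uploaded to the server only in a batch, roughly once a day at some point (or when you weigh yourself). So no matter when you query, you only get data up to about a day old. What my script showed was the newest value as of the previous upload, which was several hours before I ran it.

In the end, it turned out that the CO2 sensor built into the WS-50 can't be used for what I had in mind.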
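Printing the age of the newest sample, instead of raw timestamps, would have exposed this delay right away. Here is a minimal sketch. It assumes the `{'date': unix_time, 'value': ...}` items that the script reads from `body.series[0].data`. The sample timestamps are made up.

```python
from datetime import datetime, timezone

def newest_sample_age(series, now):
    """Seconds between `now` (unix time) and the newest sample in `series`."""
    newest = max(item["date"] for item in series)
    return now - newest

def utc(ts):
    """Render a unix timestamp readably (UTC) instead of printing the raw number."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%d %H:%M:%S UTC")

# Made-up samples; the second argument below stands in for "now"
series = [{"date": 1592627413, "value": 509}, {"date": 1592625612, "value": 519}]
age = newest_sample_age(series, now=1592627413 + 5 * 3600)
print(utc(max(s["date"] for s in series)), f"{age / 3600:.1f} h old")
```

A check such as `age > 3600` would then flag data that is too old for a live display.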


MH-Z19

Still unable to let it go, what did I do? I ordered the following CO2 sensor, the MH-Z19, from Banggood.com.

Why Banggood rather than Amazon? Because Banggood was cheaper and had the shorter delivery time. Even so, delivery was quoted at 10 to 30 days. The price, by the way, was 2,529 yen.

I hope my enthusiasm hasn't cooled by the time the CO2 sensor arrives.